Deepfake technology, AI‑driven synthetic media that can swap faces, mimic voices, or alter images, has leapt from niche experiment to global threat. Organizations now see deepfakes used in financial fraud, identity attacks, and political manipulation, while media platforms must scramble to detect manipulated content in real time. As the volume and sophistication of deepfakes climb, the stakes for individuals, businesses, and governments only grow. Below is a data‑driven look at how deepfakes are evolving, and what that means for all of us.

Editor’s Choice

- Deepfake files are projected to reach 8 million in 2025, up from 500,000 in 2023.

- Human detection of high-quality deepfake videos is only 24.5% accurate.

- Deepfakes now account for 40% of all biometric fraud attempts.

- In 2025, 1 in 20 ID verification failures is linked to deepfake usage.

- Some financial security firms report that deepfakes are responsible for approximately 5% of identity verification failures as of early 2025.

- Governments forecast that 8 million deepfake media pieces will be shared in 2025.

Recent Developments

- The U.S. passed the TAKE IT DOWN Act in May 2025, mandating platforms remove nonconsensual intimate deepfake content.

- Deepfake detection tools are stepping up; new systems evaluate mismatches in light, shadows, and audio consistency.

- Researchers released Deepfake‑Eval‑2024, a benchmark of real-world deepfakes. Many detection models saw a 45–50% drop in performance.

- In late 2024, voice phishing jumped 442%, driven by more convincing vocal impersonations.

- Real‑time deepfake manipulation (live video + voice) is emerging, a shift from static or pre-recorded fakes.

- Detection tools are now augmented with watermarking, forensic tracing, and verified metadata flags.

- Dialogue is growing about regulation and consumer awareness, but enforcement lags behind technical capability.

Prevalence and Growth Statistics

- Deepfake content jumped from about 500,000 files in 2023 to a projected 8 million in 2025.

- Between 2019 and 2023, the number of deepfake videos increased by 550%, reaching about 95,820 in 2023.

- In North America, deepfake fraud rose 1,740% between 2022 and 2023.

- In the Asia-Pacific region, deepfake incidents rose by around 1,530% over the same period.

- Deepfakes now contribute to 40% of biometric fraud attempts.

- Globally, fraud attempts overall grew around 21% year-over-year in 2025, with many linked to deepfake methods.

- The detection tool market around deepfake and synthetic media is projected to triple from 2023 to 2026.

- Deepfake fraud incidents constitute about 6.5% of all reported fraud.
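
The growth figures above mix absolute counts with percentage increases, which are easy to misread: a 1,500% increase means 16 times the original, not 15 times. The short Python sketch below works through the arithmetic for the 500,000 to 8 million projection. The function names are illustrative only.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase from old to new."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100.0


def growth_multiple(old: float, new: float) -> float:
    """Return how many times larger new is than old."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return new / old


files_2023 = 500_000    # figure quoted in the list above
files_2025 = 8_000_000  # projection quoted in the list above

print(percent_increase(files_2023, files_2025))  # 1500.0
print(growth_multiple(files_2023, files_2025))   # 16.0
```

The same two functions can be applied to any pair of before-and-after figures in this report to check whether a quoted percentage and a quoted multiple agree.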

Incidents by Year and Region

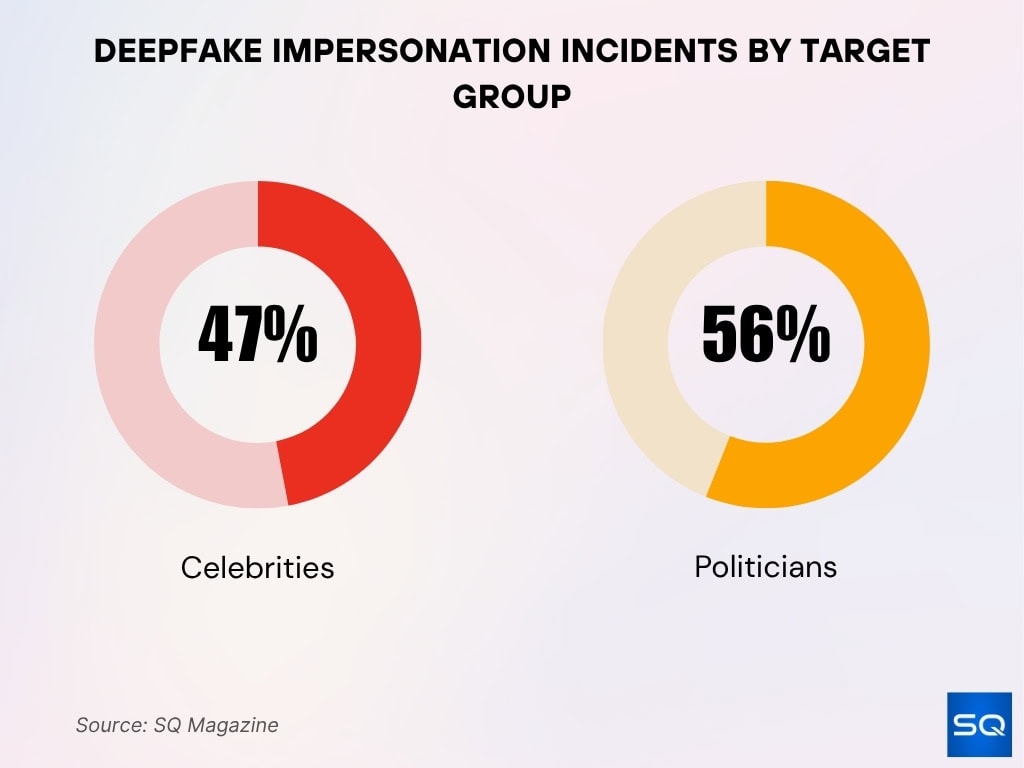

- Celebrities were targeted 47 times in Q1 2025, an 81% jump over the total in 2024.

- Politicians were impersonated 56 times in Q1 2025.

- Overall, incidents in Q1 2025 alone exceeded the full-year 2024 total by 19%.

- In 2025, 38% of reported deepfake incidents occurred in North America, led by the U.S.

- South Korea reported about 297 deepfake sex crime cases in seven months of 2024, nearly double 2021.

- Telegram “nudify” bots in Korea reached about 4 million monthly users by late 2024.

- AI-generated sexual images of Taylor Swift reached 47 million views before removal.

- Cases of political deepfakes stood at 82 across 38 countries from mid-2023 to mid-2024.

Technology Usage and Creation

- Many deepfakes use Generative Adversarial Networks (GANs) or diffusion models, trained on large face and voice datasets to produce convincing outputs.

- A recent study used GANs to detect manipulated payment images with over 95% accuracy, a sign that GANs are both a tool and a countermeasure.

- Voice cloning now often requires just 20–30 seconds of target audio to generate realistic speech.

- Real-time deepfake systems (live video + voice) are increasingly accessible, enabling scams in interactive environments.

- A benchmark showed that standard audio deepfake detectors lost up to 43% performance when exposed to more realistic inputs.

- In audio deepfake detection, the equal error rate (EER) is a standard performance metric; many models still struggle with adversarial inputs.

- A challenge‑response system reached about 87.7% AUROC on deepfake audio detection when combining human and machine checks.

- Detection models trained on older synthetic data suffer when facing newer deepfakes; many tools fail under “zero‑shot” scenarios.

- Some systems claim 99% accuracy in lab settings, but real‑world robustness remains unproven under adversarial attack.
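
The AUROC figure cited above can be read as the probability that a detector gives a randomly chosen fake a higher score than a randomly chosen real sample. The Python sketch below implements that pairwise definition directly. The function name `auroc` and the example scores are hypothetical and are not drawn from any study cited here.

```python
def auroc(real_scores, fake_scores):
    """Area under the ROC curve via pairwise comparison.

    Scores are assumed to mean "higher = more likely fake".
    Each (fake, real) pair counts 1 if the fake scores higher,
    0.5 on a tie, and 0 otherwise.
    """
    if not real_scores or not fake_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for f in fake_scores:
        for r in real_scores:
            if f > r:
                wins += 1.0
            elif f == r:
                wins += 0.5
    return wins / (len(fake_scores) * len(real_scores))


# Hypothetical detector scores, for illustration only.
real = [0.1, 0.3, 0.4, 0.6]
fake = [0.35, 0.7, 0.8, 0.9]

print(auroc(real, fake))  # 0.875
```

A value of 0.5 corresponds to random guessing and 1.0 to perfect separation, so an AUROC near 0.88 indicates useful but imperfect ranking of fakes above real samples.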

Deepfake Content Types (Video, Audio, Image)

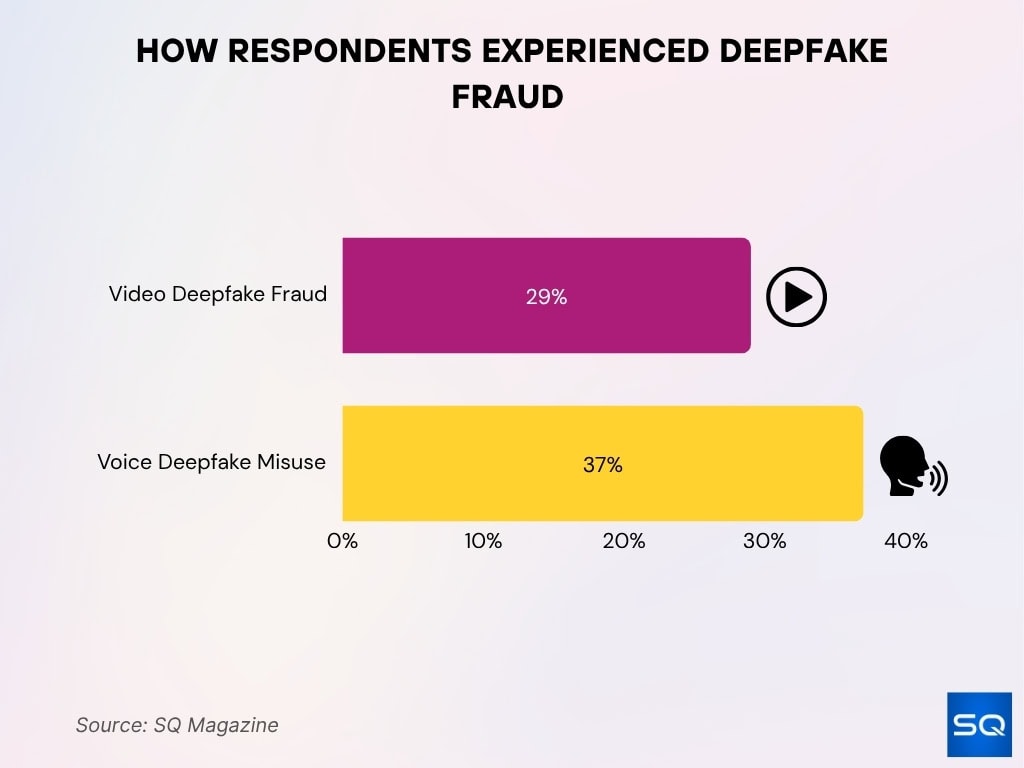

- In survey data, 29% of respondents reported experiencing video deepfake fraud, and 37% had seen voice deepfake misuse.

- Video deepfakes remain the most visible type; they include face swaps, lip-sync manipulation, and full-body replacement.

- Audio deepfakes/voice cloning allow impostors to replicate tone, accent, and emotion, used heavily in phishing or impersonation.

- Image deepfakes are used in disinformation, fake endorsements, or fabricated events.

- Researchers classify four categories of deepfakes: audio, video, image, and textual (AI-manipulated text content).

- As of 2023, over 500,000 video and voice deepfakes were shared globally on social media.

- Human accuracy is low: people correctly identify high-quality deepfake videos only around 24.5% of the time.

- For images, human detection averages about 62% accuracy.

- Only 0.1% of participants across modalities could reliably spot fakes in mixed tests.

Deepfake and Financial Fraud Statistics

- Fraud attempts involving deepfakes have grown 2,137% over three years in some financial institutions.

- Deepfake-based fraud once made up 0.1% of fraud attempts; it now represents about 6.5%.

- The market for fraud detection tools is expanding with demand driven by deepfake threats.

- 49% of businesses globally reported audio and video deepfake fraud incidents by 2024.

- Consumers lost $27.2 billion in 2024 to identity fraud, a 19% increase from the previous year.

- In fraud detection, deepfake risks are cited as among the top AI‑driven threats in cybersecurity analyses.

Key Areas in Society Most Impacted by Deepfakes

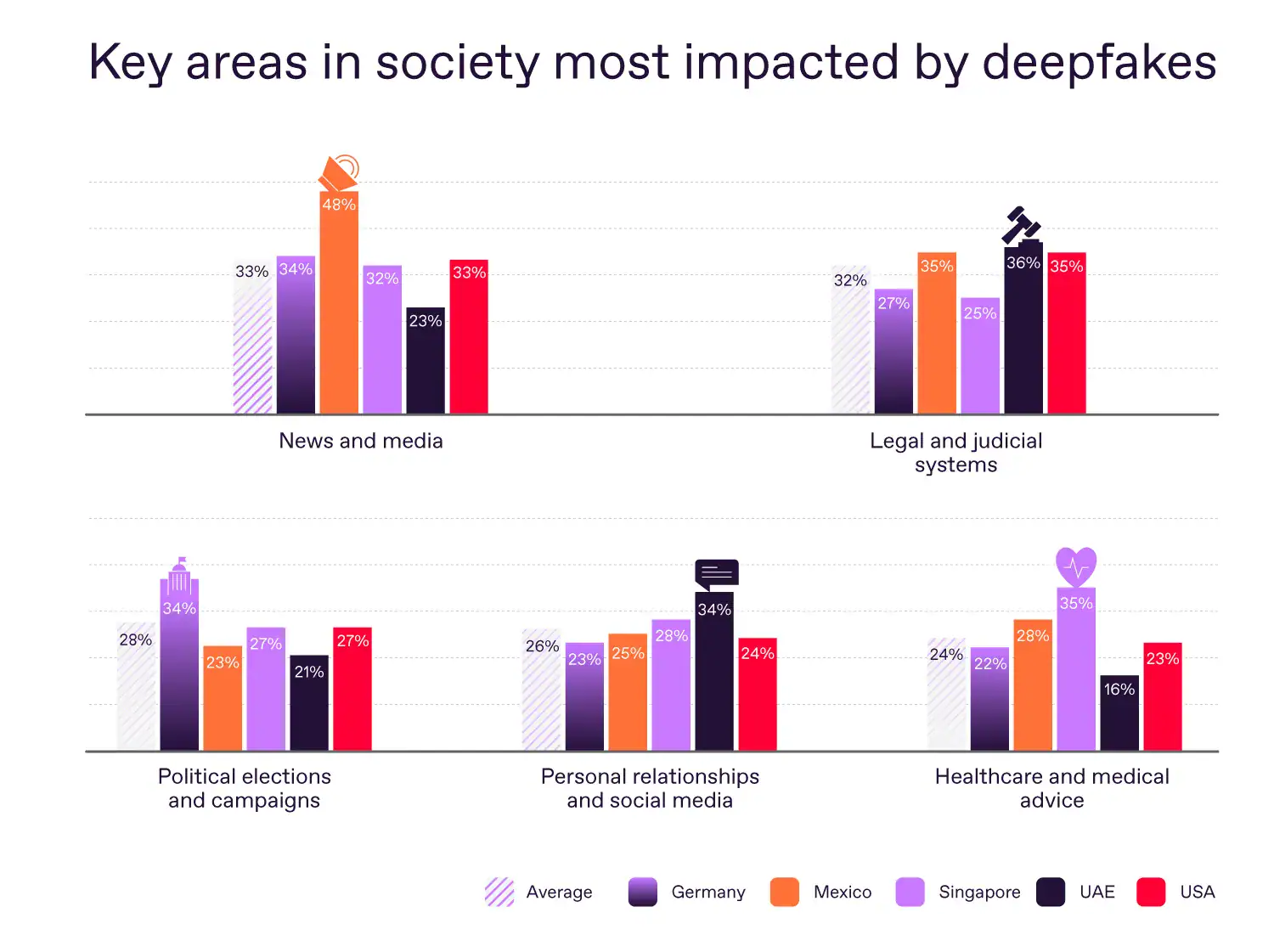

- News and media emerged as the most impacted sector, with Mexico at 48%, far above the global average of 33%, while the UAE reported only 23%.

- Legal and judicial systems face growing risks, especially in the UAE (36%) and the USA (35%), compared to a global average of 32%.

- Political elections and campaigns saw deepfake concerns highest in Germany (34%), reflecting fears over misinformation during voting periods.

- Personal relationships and social media were notably affected in Germany (34%) and the UAE (28%), against a global average of 26%, underscoring personal-level trust issues.

- Healthcare and medical advice was the least impacted domain globally (24% average), yet Singapore (35%) and Mexico (28%) showed notable regional vulnerability to health misinformation.

Phishing and Cybersecurity Threats

- Voice phishing (vishing) is rising; it uses cloned voices for more convincing scams.

- Globally, 1 in 10 adults say they’ve encountered an AI voice scam, and 77% of those reported losses.

- Over 50% of adults share voice or audio data weekly, providing raw material for voice cloning.

- In the U.S., deepfake and impersonation scams have infiltrated call centers, corporate security, and financial channels.

- Attackers can bypass voice authentication systems using synthetic voices trained on minimal data.

- Many AI‑powered phishing tools now integrate deepfake capabilities to personalize attacks.

- Deepfake content can be used to mimic an executive or public figure, pushing spoofed instructions to employees or partners.

- Cyber risk assessments now list deepfake attacks as one of the top three AI threats.

Impact on Businesses and Organizations

- 49% of businesses globally reported audio or video deepfake incidents by 2024.

- Many firms now lose time, reputation, or customer trust dealing with manipulated media claims or fraudulent responses.

- Deepfake attacks against enterprises target HR, finance, legal, and executive communications.

- Some organizations now allocate budgets specifically for deepfake detection tools and media forensics.

- Losses from fraud force firms to invest heavily in identity verification, even for low-margin transactions.

- Regulatory compliance, especially in finance, healthcare, or defense, compounds the cost of potential deepfake exposure.

- Several businesses have publicly acknowledged deepfake attempts used to impersonate executives or clients.

- In Australia, 20% of businesses reported receiving deepfake threats, and 12% admitted being deceived.

Company Confidence in Detecting Deepfakes

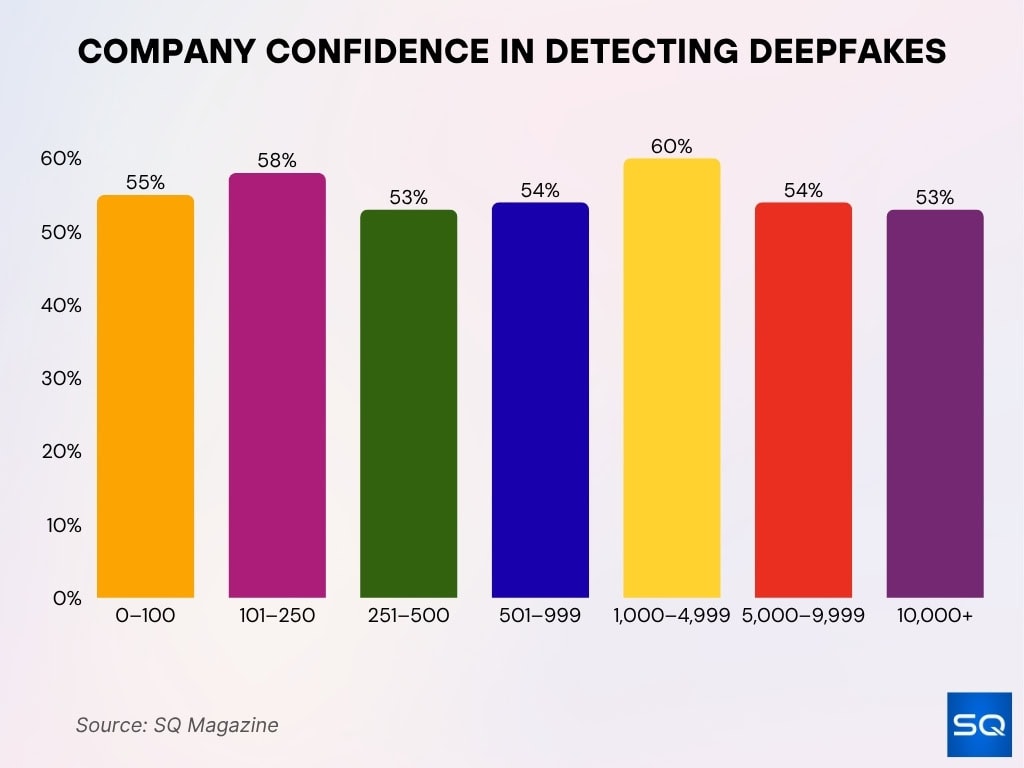

- Companies with 1,000–4,999 employees expressed the highest confidence in their current deepfake detection methods, at 60%.

- Smaller firms with 101–250 employees showed strong confidence at 58%, indicating effective adoption of detection tools despite limited resources.

- Only 53% of mid-sized organizations (251–500 employees) felt confident, reflecting possible gaps in detection capabilities or training.

- Large enterprises with 10,000+ employees reported 53% confidence, suggesting scale does not necessarily equate to stronger detection performance.

- Across all company sizes, confidence stayed within a 53–60% range, highlighting moderate but uneven global readiness against deepfake threats.

Effects on Consumers and the Public

- 60% of consumers say they encountered a deepfake video in the past year.

- Only 15% claim they’ve never seen a deepfake video.

- Many consumers now distrust video or audio evidence, making it harder to respond in a crisis.

- Some have fallen victim to romantic scams, phishing, or extortion involving synthetic media.

- Humans judge audio authenticity correctly about 73% of the time, but are easily fooled by fine details.

- Humans struggle to detect short AI-generated voices; for clips under 20 seconds, correct identification is often under 60%.

- In mixed tests, only 0.1% of participants identified fakes accurately across all media types.

- Public confidence in media, journalism, and court evidence is threatened by possible deepfake misuse.

Scams and Social Engineering

- Scammers use celebrity likenesses to push bogus products or financial ploys; one scam using a deepfake of Gisele Bündchen generated millions.

- Deepfake-enabled “AI romance fraud” is growing, where a synthetic persona builds trust over time.

- In Q1 2025, 87 AI deepfake scam operations run by fraud rings in Asia were dismantled.

- Deepfakes are used to fake urgent corporate instructions, like payments to fraudsters posing as executives.

- In 2024, 6,179 people in the U.K. and Canada lost £27 million in a crypto deepfake scam.

- Some attacks use video of public figures to legitimize fraudulent investment or donation appeals.

- AI models now synthesize personalized audio and video to match victim profiles, making social engineering harder to distinguish.

- Deepfake scams increasingly combine multiple modalities (video and audio) to reinforce deception.

Detection and Accuracy Challenges

- Many detectors perform well on “seen” data but fail on newer or adversarial deepfakes.

- Audio deepfake detectors have achieved about 88.9% accuracy in controlled settings, but degrade under adversarial conditions.

- Some tools claim 99% accuracy, but those claims often exclude stealthy or high-quality modern fakes.

- Real-world robustness is weakening; tiny perturbations can reduce detection accuracy.

- Human evaluators remain inconsistent; studies show variable detection rates across participants.

- In audio, equal error rates remain a key metric; many models struggle in noisy environments.

- Benchmark datasets often lack environmental noise or cross-modality variation.

- Combining human intuition and machine detection shows promise, but scaling that is nontrivial.
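
The equal error rate mentioned above is the operating point at which a detector wrongly flags real samples as often as it misses fakes. The Python sketch below is a minimal illustration that sweeps candidate thresholds over a finite set of scores and returns the error rate where the two are closest. The function name, the scoring convention (higher means more likely fake), and the example scores are assumptions for illustration, not details from any benchmark cited here.

```python
def equal_error_rate(real_scores, fake_scores):
    """Approximate the EER by sweeping thresholds over observed scores.

    A sample is flagged as fake when its score is >= threshold.
    Returns the mean of the false positive and false negative rates
    at the threshold where the two rates are closest.
    """
    if not real_scores or not fake_scores:
        raise ValueError("both score lists must be non-empty")
    best_gap = None
    best_eer = None
    for t in sorted(set(real_scores) | set(fake_scores)):
        # Real samples wrongly flagged as fake.
        fpr = sum(s >= t for s in real_scores) / len(real_scores)
        # Fake samples that slip through as real.
        fnr = sum(s < t for s in fake_scores) / len(fake_scores)
        gap = abs(fpr - fnr)
        if best_gap is None or gap < best_gap:
            best_gap = gap
            best_eer = (fpr + fnr) / 2
    return best_eer


# Hypothetical detector scores, for illustration only.
real = [0.1, 0.3, 0.4, 0.6]
fake = [0.35, 0.7, 0.8, 0.9]

print(equal_error_rate(real, fake))  # 0.25
```

Lower is better: an EER of 0.25 means that, at the balanced threshold, a quarter of real samples are flagged and a quarter of fakes are missed. With small samples the two rates may never be exactly equal, which is why the sketch averages them at the closest point.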

Human Ability to Detect Deepfakes

- Participants detect real stimuli about 68.1% of the time, but struggle more with fakes.

- Many studies find that humans do worse than chance when detecting deepfakes across mixed media.

- In audio tests, humans often perform around 73% in controlled settings, but accuracy drops with clip brevity or complexity.

- For clips shorter than 20 seconds, identification accuracy falls below 60%.

- Only 0.1% of subjects correctly distinguished real from fake content across all three modalities: images, audio, and video.

- Some training or exposure helps, but gains are limited when confronted with novel methods.

- Under cognitive load or stress, detection performance degrades further.

Voice Cloning and AI‑Generated Audio Statistics

- 1 in 10 people report receiving a message from a voice clone, and 77% of those lost money.

- Among those victims, 36% lost $500–$3,000, and 7% lost $5,000–$15,000.

- Many adults, 53%, share voice or audio data online weekly, fueling cloning potential.

- Voice deepfakes rose 680% over the previous year.

- Voice cloning now mimics emotional nuance and accent, not just pitch or tone.

- In deepfake voice detection benchmarks, top EERs are often above 13%, showing room for improvement.

- A real‑world audio detector claims 96% accuracy scanning media for synthetic speech.

- Studies show humans mistake AI voices for real ones about 80% of the time in short clips.
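
Several figures above are nested: a share of people received a cloned-voice message, a share of those lost money, and a share of those victims fell into each loss band. Multiplying the nested shares gives the implied proportion of all respondents. The Python sketch below works through that arithmetic, assuming the percentages nest exactly as the bullets describe; the variable and function names are illustrative.

```python
import math


def nested_share(*shares: float) -> float:
    """Multiply nested proportions to get a share of the whole population."""
    return math.prod(shares)


received_clone = 0.10   # "1 in 10" received a voice-clone message
lost_money = 0.77       # 77% of those lost money
lost_500_3000 = 0.36    # 36% of victims lost $500 to $3,000
lost_5000_15000 = 0.07  # 7% of victims lost $5,000 to $15,000

print(f"{nested_share(received_clone, lost_money):.1%}")                   # 7.7%
print(f"{nested_share(received_clone, lost_money, lost_500_3000):.1%}")    # 2.8%
print(f"{nested_share(received_clone, lost_money, lost_5000_15000):.1%}")  # 0.5%
```

Read this way, roughly 7.7% of all respondents lost money to a voice clone, which is a more comparable figure than the headline 77% when set against other population-wide statistics.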

Frequently Asked Questions (FAQs)

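How much money was lost to deepfake-enabled fraud in Q1 2025?

Deepfake-enabled fraud resulted in over $200 million in losses in Q1 2025.

What share of identity verification failures involve deepfakes?

In 2025, 1 in 20 identity verification failures (5%) is linked to deepfakes.

How fast are deepfake fraud attempts growing?

Fraud attempts using deepfakes have increased by 2,137% over the last three years.

How many consumers have encountered a deepfake video?

60% of consumers report having encountered a deepfake video within the last year.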

Conclusion

Deepfakes are no longer a speculative issue; they are a pervasive, evolving threat touching government, business, and everyday life. As financial losses surge, detection tools struggle to keep pace, and human ability to discern fakes falls short, organizations must adopt layered defenses, combining advanced detection, governance, media authentication, and user education. The scale and sophistication of misuse demand urgency. In this report, we’ve laid out the landscape, the risks, and the need for collective action to stay ahead of synthetic deception.