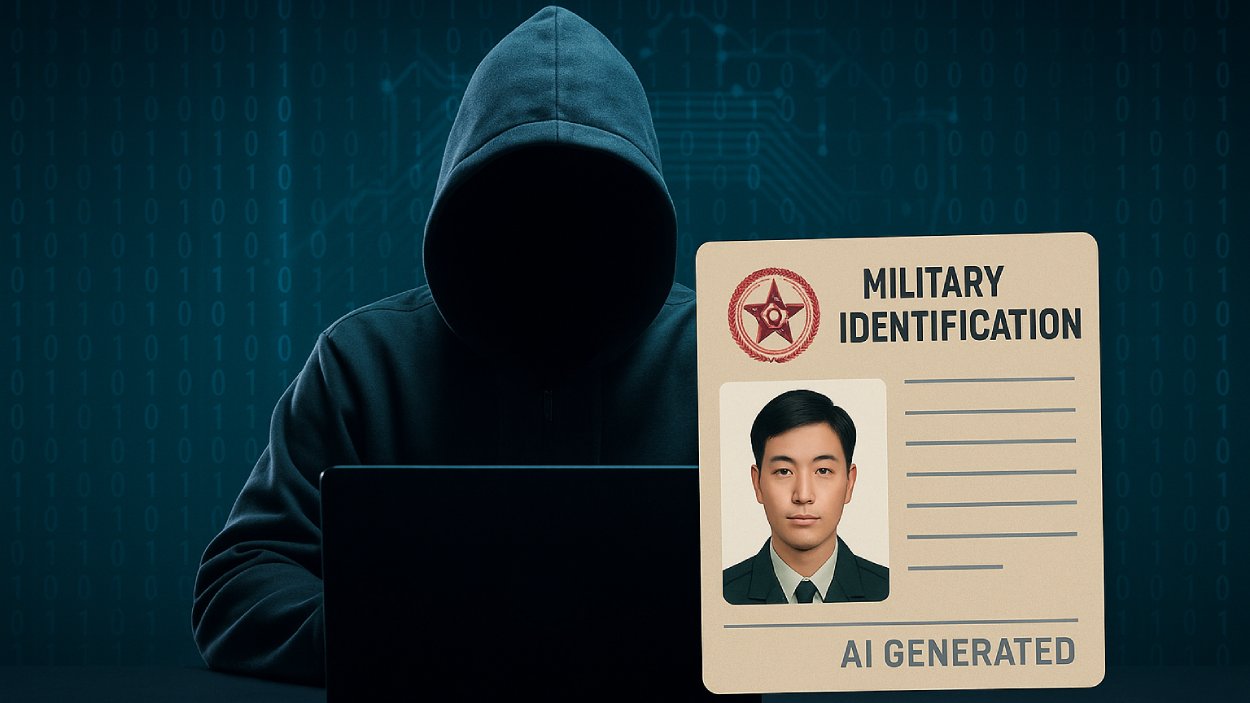

A suspected North Korean hacking group is using artificial intelligence to create deepfake military IDs, targeting South Korean institutions with a sophisticated phishing scam.

Quick Summary – TLDR:

- North Korea-linked group Kimsuky used ChatGPT to generate fake military ID images in a phishing campaign that impersonated a South Korean defense institution.

- The group attempted to trick journalists, researchers and human rights activists using AI-crafted emails that mimicked military correspondence.

- Cybersecurity firm Genians identified the attack, warning it shows growing misuse of AI in state-sponsored espionage.

- Hackers bypassed AI restrictions by cleverly tweaking prompts to get legitimate-looking ID mockups from ChatGPT.

What Happened?

A new phishing attack linked to North Korea has been uncovered by South Korean cybersecurity firm Genians. The attackers used ChatGPT to help forge a fake South Korean military identification card as part of a broader social engineering effort. The attack targeted journalists, researchers, human rights activists and even a defense-related institution in South Korea.

Kimsuky used AI deepfake-generated ID cards via ChatGPT to impersonate a South Korean defense institution in an APT spear-phishing attack. https://t.co/15Tpl2AGHT #Kimsuky #ChatGPT #Deepfake pic.twitter.com/hjJuZlcQ53

— CyberWar – 싸워 (@cyberwar_15) September 14, 2025

Kimsuky Group Steps Up With AI Tactics

The cyber-espionage unit known as Kimsuky, believed to be backed by the North Korean government, has reportedly shifted to more advanced tactics using generative AI tools. According to a Genians report published on September 15, the group ran a phishing campaign in July built around a fake email asking recipients to review a draft military ID. The ID image, allegedly generated with ChatGPT or a similar tool, was crafted to look legitimate so that recipients would be more likely to engage with the message.

- The phishing email was sent from an address designed to look as if it belonged to South Korea's official military domain, .mil.kr.

- The attachment contained malware designed to extract data from the recipient’s device.

The real threat, cybersecurity experts say, lies not just in the visual deception but in how AI makes these scams seem personally or professionally relevant. A Genians spokesperson said, “Using an image that aligns with the recipient’s actual work context significantly increases the chance of engagement.”

AI Bypass Tactics Raise Alarm

ChatGPT and similar platforms are programmed to reject requests to create images of government-issued documents like military IDs. However, the attackers managed to circumvent this restriction by requesting mockups for “legitimate” purposes instead of asking for direct copies.

- Genians researchers themselves were able to replicate this bypass during their investigation.

- Hackers are believed to have asked for “sample designs” or “mock ID layouts,” which allowed them to generate the visuals.

This technique marks a significant leap in North Korea’s cyber capabilities. It’s no longer just about malicious code but about crafting entire personas and documents using AI.

Growing Pattern of AI Misuse by North Korean Hackers

This isn’t the first time North Korean operatives have used generative AI tools for deception. Past incidents include:

- PurpleDelta and PurpleBravo, two other North Korea-linked groups, have used AI to modify documents, write code, and translate text.

- A cybersecurity firm found that hackers used Claude to forge résumés and pass job interviews in an effort to land remote jobs at US tech companies.

- OpenAI also confirmed in February that it had banned accounts suspected of using its services to create fake recruiting materials.

Mitch Haszard, a threat intelligence analyst at Recorded Future, noted that these groups are leveraging AI not just for deception, but for tool development, scenario planning and recruitment scams.

Cyber Threat at a National Security Level

The use of generative AI in this context raises serious national security concerns. As Genians warns, “AI services are powerful tools for enhancing productivity, but they also represent potential risks when misused.”

The firm urges organizations to enhance their cybersecurity defenses, especially across recruitment, operations and communication channels. Continuous monitoring and awareness training are essential, given how realistic these AI-generated assets have become.

SQ Magazine Takeaway

I find this story deeply unsettling. We often talk about the benefits of AI, but this shows its very real and dangerous flip side. North Korea is not just experimenting with AI tools; it is using them to scale up its espionage operations, trick professionals, and possibly breach sensitive sectors. What makes it worse is how easy it is to bypass built-in safeguards. If a threat actor can fool a model into making a military ID, what else is on the table? This should be a wake-up call for all organizations to rethink their security assumptions in the age of AI.