Anthropic is now testing a new Chrome extension that lets its AI assistant, Claude, browse, click, and take actions for you.

Quick Summary – TLDR:

- Anthropic is testing a Chrome extension called Claude for Chrome that can perform actions inside the browser.

- The pilot is limited to 1,000 users on the Claude Max plan with a waitlist now open.

- The agent can navigate webpages, fill forms, click buttons, and more.

- Anthropic has added safety measures to defend against prompt injection attacks, though its own tests show they do not yet stop every attack.

What Happened?

Anthropic has rolled out a limited research preview of its new Claude for Chrome extension, which lets Claude act directly inside the Chrome browser. The AI agent can view webpages, click on items, and even submit forms, which could make it a powerful productivity tool. But that power also carries serious risks, so Anthropic is moving cautiously, limiting access to a small group of users while it tests its safety systems.

Claude for Chrome: A Research Pilot Begins

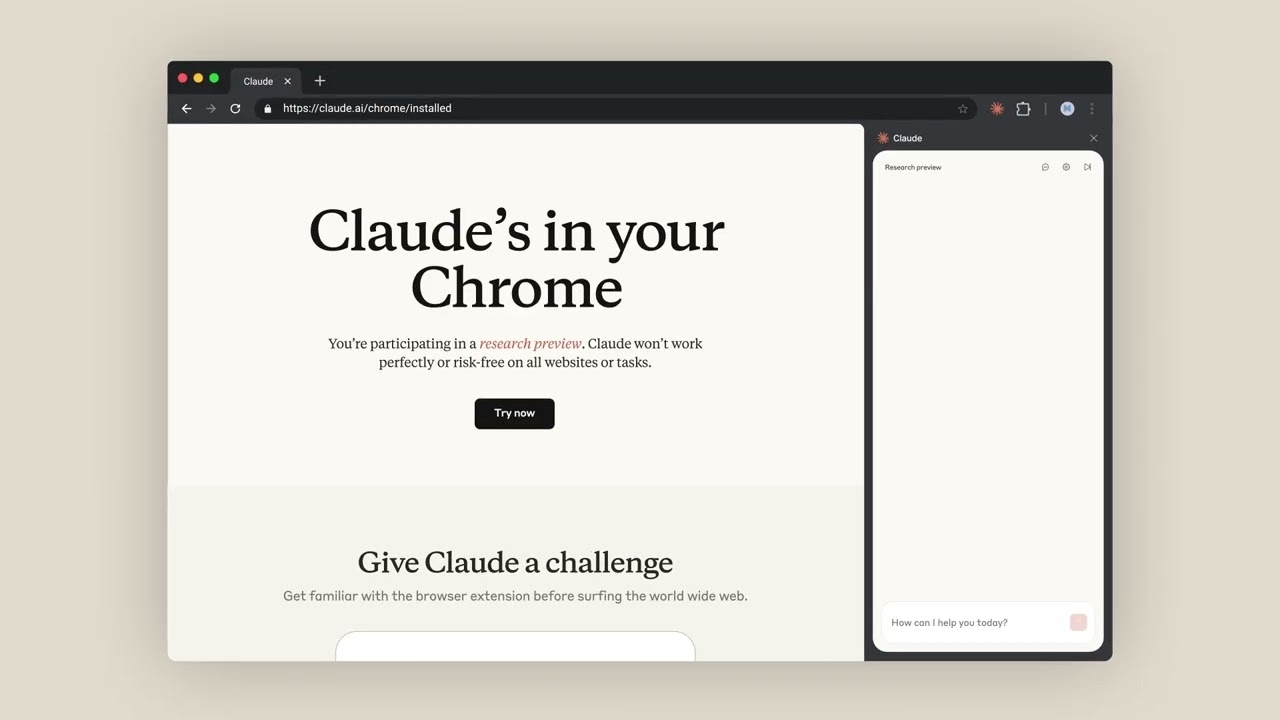

Anthropic’s Claude for Chrome is now live for 1,000 selected users subscribed to its Max plan, which costs $100 to $200 per month. Users in the pilot can install a Chrome extension that opens a Claude side panel. From there, Claude can act on whatever is open in the browser tab, clicking buttons and filling in forms to handle tasks such as scheduling meetings, drafting emails, and testing websites.

This move fits a broader trend among major AI companies. Perplexity recently launched Comet, an AI-powered browser, and OpenAI is rumored to be developing one of its own. Google, meanwhile, has embedded Gemini into Chrome and is facing a major antitrust case that could force it to sell Chrome altogether.

The Need for Caution: Prompt Injection Risks

While Claude for Chrome offers convenience, it also brings serious security concerns. One of the main risks is prompt injection attacks, where malicious websites embed hidden instructions that trick Claude into taking actions without user consent. These could include deleting emails, making unauthorized purchases, or leaking personal data.

Anthropic has been transparent about these risks. The company ran 123 test cases across 29 different attack scenarios and found that without any safety measures, Claude followed harmful prompts 23.6 percent of the time.

One striking test involved a malicious email that, under the guise of a security cleanup, instructed Claude to delete the user’s emails. Claude followed those instructions without asking the user for confirmation. Across the full test set, the new defenses have cut the attack success rate to 11.2 percent.

Key Safety Measures Implemented:

- Permission-based controls: Users can approve or restrict access to specific sites.

- High-risk action confirmations: Claude always asks before publishing, purchasing, or sharing private data.

- Category-based site blocking: Financial, adult, and pirated content sites are blocked by default.

- Suspicious pattern detection: Classifiers are designed to flag suspicious instruction patterns and other unusual behavior.

- Browser-specific protection: Defenses specifically target attacks hidden in form fields, URLs, and tab titles.
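To make the first three safeguards more concrete, here is a minimal Python sketch of how a per-site permission check, a category blocklist, and a confirmation prompt for high-risk actions might fit together. It is purely illustrative: `PermissionGate`, the category names, the action names, and the example sites are all hypothetical and do not reflect how Claude for Chrome is actually built. It also leaves out the classifier-based detection and browser-specific defenses, which simple rules like these cannot provide.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set
from urllib.parse import urlparse

# Hypothetical values for illustration only; not Anthropic's real configuration.
BLOCKED_CATEGORIES = {"financial", "adult", "piracy"}
HIGH_RISK_ACTIONS = {"publish", "purchase", "share_private_data"}


@dataclass
class PermissionGate:
    """Hypothetical gate that decides whether an agent action may proceed."""
    allowed_sites: Set[str] = field(default_factory=set)       # sites the user approved
    site_categories: Dict[str, str] = field(default_factory=dict)  # site -> category label
    # Asks the user to approve a high-risk action; denies by default.
    confirm: Callable[[str, str], bool] = lambda action, site: False

    def check(self, action: str, url: str) -> bool:
        site = urlparse(url).hostname or ""
        # 1. Category-based blocking applies even to approved sites.
        if self.site_categories.get(site) in BLOCKED_CATEGORIES:
            return False
        # 2. Permission-based control: unknown sites are denied.
        if site not in self.allowed_sites:
            return False
        # 3. High-risk actions always require explicit user confirmation.
        if action in HIGH_RISK_ACTIONS:
            return self.confirm(action, site)
        return True


gate = PermissionGate(
    allowed_sites={"calendar.example.com", "shop.example.com"},
    site_categories={"bank.example.com": "financial"},
)
print(gate.check("click", "https://calendar.example.com/week"))  # True
print(gate.check("purchase", "https://shop.example.com/cart"))   # False: not confirmed
print(gate.check("click", "https://bank.example.com/login"))     # False: blocked category
```

Every path that is not explicitly allowed returns `False`, a deny-by-default choice that matches the cautious stance described above. A confirmation callback that actually prompts the user would take the place of the default lambda.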

In a more targeted “challenge” test, which focused on browser-specific attacks like invisible elements in a page’s DOM, attack success rates were reduced from 35.7 percent to 0 percent after deploying new defenses.

Real-World Feedback to Shape the Future

Internal testing has shown that Claude can successfully help with routine tasks such as handling calendars, writing emails, and submitting expense reports. However, Anthropic stresses that real-world browsing is more complex and unpredictable. That’s why the company is using this pilot to study authentic usage patterns and unexpected vulnerabilities.

As part of the research phase, Anthropic will use feedback from these early users to refine its safety systems, permission models, and prompt classifiers. The goal is to get attack success rates as close to zero as possible before a wider rollout.

SQ Magazine Takeaway

I think this is a smart and measured move by Anthropic. AI agents in browsers are clearly the next big thing, but they come with some real dangers. It’s encouraging to see a company take those risks seriously rather than rush out flashy features. Claude can really help with day-to-day tasks in the browser, but trust is key, and building trust means testing the tech, fixing the flaws, and being upfront about what can go wrong. I’m excited to see how this evolves, and I appreciate the slow and steady approach.