In the spring of 2020, a simple tweet claimed that sipping hot water every 15 minutes could kill the coronavirus. No medical source backed it, yet the post quickly amassed over 150,000 shares. Fast forward to 2025, and we’ve learned that misinformation online is not a bug; it’s a system feature.

Today, social media platforms act as both a stage and an amplifier for false content, shaping opinions faster than fact-checkers can intervene. Understanding the scale and reach of this phenomenon is not just a data exercise; it’s critical to how we vote, how we stay safe, and how we engage with the world around us.

Editor’s Choice

- As of Q1 2025, over 72% of internet users globally encounter misinformation on at least one social platform every month.

- Facebook and X (formerly Twitter) remain the top channels where misinformation is both most encountered and most shared.

- Around 45% of U.S. adults say they find it difficult to determine whether the information on social media is true or false.

- AI-generated fake content saw a 300% increase from early 2023 to mid-2025, particularly via deepfake videos.

- 1 in 3 Gen Z users admitted to unknowingly sharing misinformation, believing it to be factual at the time of posting.

- YouTube’s algorithm recommended at least one video containing misinformation to 26% of new accounts in an internal simulation test (2025).

- Despite platform efforts, engagement with misleading content is down only 9% year over year, suggesting limited success in suppression tactics.

Social Media Platforms: Difficulty in Spotting Fake News

- TikTok ranks highest in perceived difficulty, with 27% of respondents finding it very or somewhat difficult to identify trustworthy news.

- X (formerly Twitter) follows closely, with 24% saying it’s hard to distinguish real from fake news, and 35% feeling neutral.

- On Facebook, 21% of users report difficulty, while 51% find it easy to identify credible news, a notable split in perception.

- Instagram shows similar results, with 20% finding it difficult and 49% feeling it’s very/somewhat easy to assess news credibility.

- LinkedIn is seen as more neutral, with a relatively high 41% saying they neither trust nor distrust the content, and only 18% finding it difficult.

- On WhatsApp, 17% struggle with fake news detection, while 51% say it’s easy to identify the truth.

- YouTube appears more trustworthy, with only 17% finding it hard and 54%, the second-highest, rating it easy.

- Google Search stands out as the most trusted platform, with just 13% facing difficulty and 60% saying it’s easy to spot reliable information.

Global Prevalence of Misinformation on Social Media

- In 2025, over 4.8 billion social media users worldwide will be exposed to some form of misinformation.

- The Asia-Pacific region shows the highest prevalence, with 83% of users reporting regular exposure to misinformation.

- In sub-Saharan Africa, health-related misinformation saw a 75% increase, largely due to emerging epidemics and low digital literacy.

- The European Commission’s 2025 report flagged 19% of political content shared across Facebook, Instagram, and X as partially or entirely false.

- In Brazil, misinformation around elections jumped by 27% in early 2025, following the rise of bot-powered content farms.

- India continues to be a misinformation hotspot, with over 350 million users engaging with false or unverified content every month.

- Among OECD nations, Canada and Germany recorded the lowest misinformation exposure rates, with under 34% of users reporting seeing it weekly.

Most Affected Platforms by Misinformation

- Facebook continues to be the most affected, with 52% of users reporting exposure to misinformation weekly.

- TikTok, especially among users under 25, shows the fastest-growing rate of misinformation dissemination.

- WhatsApp groups in Latin America and Southeast Asia are primary vectors for viral hoaxes and political falsehoods.

- Telegram, often lauded for privacy, also enables unregulated misinformation sharing within closed groups and channels.

- YouTube Shorts, introduced to rival TikTok, became a top vector for health misinformation, with 21% of viral clips flagged for inaccuracy.

- Despite content warnings, Instagram Stories are twice as likely to spread falsehoods compared to static posts.

- Reddit’s misinformation reports rose by 17%, with community moderators flagging concerns about AI-generated content in r/worldnews.

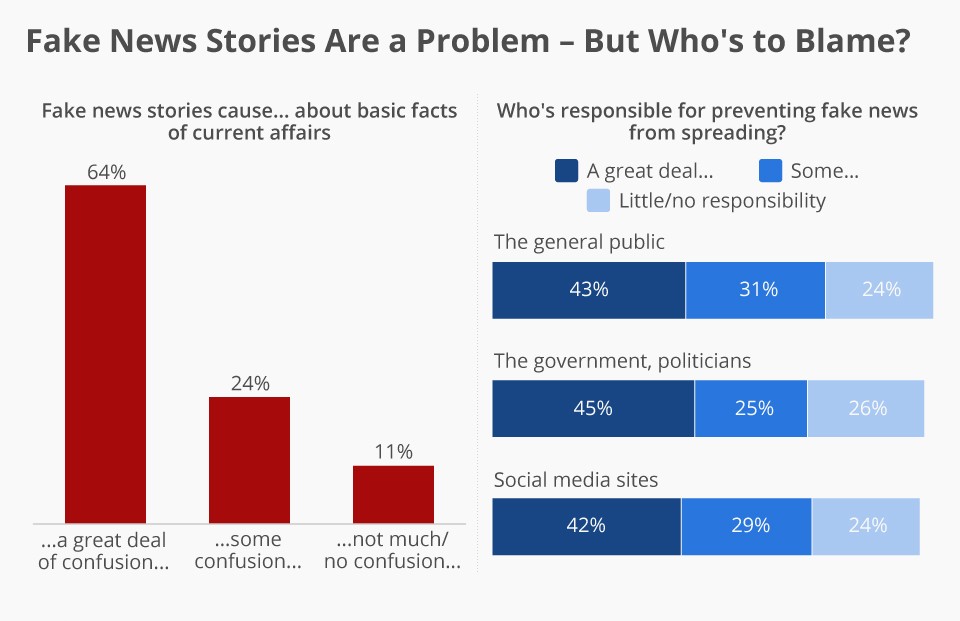

Fake News Confusion & Responsibility: Key Public Perceptions

- 64% of people say fake news causes a great deal of confusion about current events.

- 24% believe fake news creates some confusion in understanding news.

- 11% feel fake news causes little or no confusion.

- 43% say the general public bears a great deal of responsibility for preventing fake news.

- 31% think the public holds some responsibility in combating fake news.

- 24% believe the public has little or no responsibility for stopping fake news.

- 45% believe government and politicians carry a great deal of responsibility for preventing fake news.

- 25% say the government has some responsibility in controlling fake news.

- 26% feel the government has little or no responsibility.

- 42% believe social media sites have a great deal of responsibility in stopping fake news.

- 29% think social media companies hold some responsibility.

- 24% feel social media has little or no responsibility in the matter.

Demographic Breakdown of Misinformation Susceptibility

- Older adults (65+) remain the most susceptible group, with 61% unable to consistently identify false content across platforms.

- Teenagers (13–17) saw a 24% spike in exposure to misinformation, especially via meme-driven formats on TikTok and Snapchat.

- College-educated users are 30% more likely to question misinformation than those without a post-secondary education.

- Rural users in the U.S. are 2x more likely to engage with misinformation than urban users, driven by echo chamber effects.

- Non-native English speakers encounter a higher share of poorly translated or culturally misleading misinformation, especially on YouTube.

- Men are slightly more prone to believe conspiracy content, while women are more likely to engage with health-related falsehoods.

- In 2025, users aged 30–45 reported the greatest increase in trust in accounts that spread misinformation, especially on finance and investment topics.

Misinformation Spread During Major Events (Elections, Pandemics, Wars)

- During the 2024 U.S. elections, misinformation posts on X surged by nearly 240% in the 48 hours before and after Election Day.

- In Ukraine, nearly 1 in 4 social media users were exposed to state-sponsored disinformation during the ongoing conflict, according to independent audits.

- COVID-19 misinformation may have tapered, but new pandemic hoaxes about synthetic viruses reached 9 million shares across Meta platforms in 2025.

- In Indonesia’s 2024 presidential election, at least 29% of political posts on TikTok were found to contain fabricated quotes or manipulated videos.

- During the 2025 Israel-Gaza escalation, over 13,000 AI-generated visuals were identified across social media within the first week.

- False health claims during the Marburg virus scare in Africa saw a 70% rise in WhatsApp chain messages, creating a panic-buying effect.

- Around 47% of misinformation during crises originates from anonymous or bot accounts, making source-tracking difficult for platforms.

- Following global climate disasters, including the 2025 Atlantic hurricane season, eco-misinformation posts quadrupled in engagement.

- Election-specific disinformation in emerging democracies (e.g., Nigeria, Bangladesh) increased by 33%, often targeting ethnic groups or voting infrastructure.

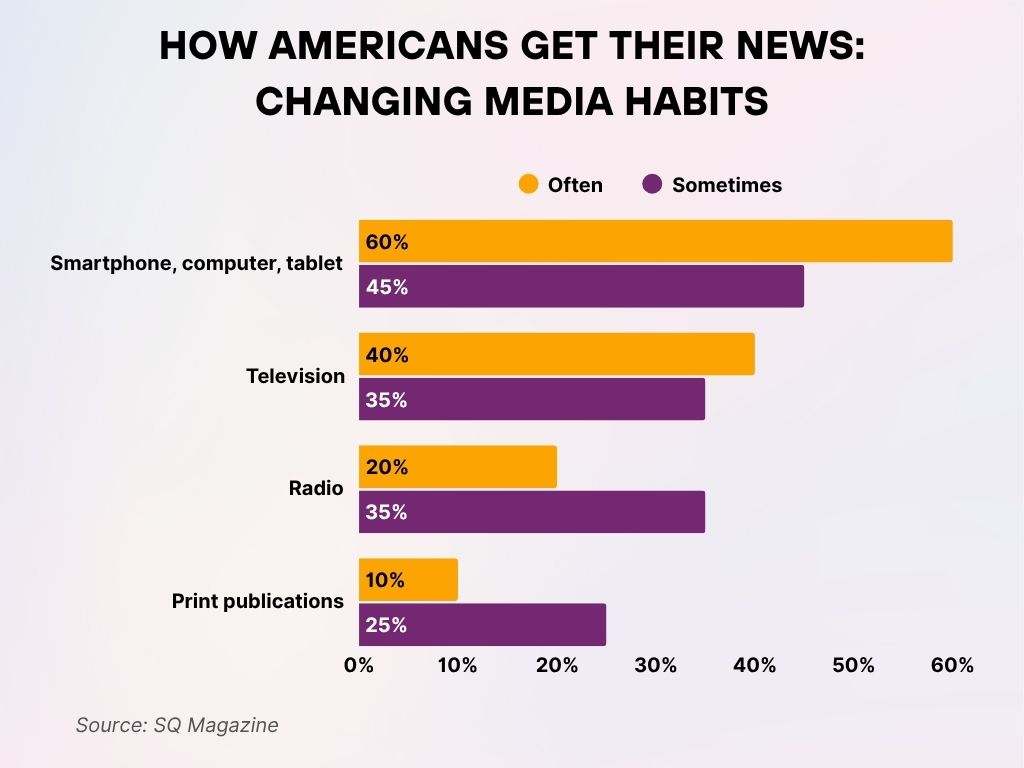

How Americans Get Their News: Changing Media Habits

- 60% of Americans often get their news from a smartphone, computer, or tablet.

- 45% say they sometimes use smartphones or other digital devices for news.

- 40% of respondents often watch television for news. TV remains a strong traditional medium, though it trails digital sources.

- 35% of people sometimes rely on television for news.

- 20% of Americans often listen to the radio for news updates.

- 35% say they sometimes use the radio to get their news.

- 10% of respondents often read print publications for news.

- 25% say they sometimes read newspapers or magazines.

Role of Algorithms in Amplifying False Information

- In 2025, algorithmic amplification accounted for 64% of total engagement on misinformation content across major platforms.

- Facebook’s recommender system continues to boost divisive content, increasing false post impressions by 22% on average.

- YouTube’s algorithm still recommends at least one misleading video for every five videos watched in unpersonalized sessions.

- On TikTok, misinformation has a 15% higher chance of going viral because the feed ranks content by engagement velocity rather than by verified accuracy.

- AI-based personalization on Instagram Reels inadvertently boosted political misinformation by 31% during international elections.

- Hashtag clustering techniques, used by X (formerly Twitter) to group trending topics, have led to false narratives trending within minutes.

- Reddit’s upvote system was gamed by misinformation networks, increasing visibility of false headlines in major subreddits by 18%.

- In response, some platforms are testing ‘friction layers’, requiring users to click twice before resharing questionable content.

- Despite these tests, only 6% of false posts are currently demoted by algorithms in real time, an insufficient moderation rate.
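
The "friction layer" idea mentioned above, where a reshare of questionable content only goes through on a second click, can be sketched in a few lines of Python. This is an illustrative sketch, not any platform's actual implementation: the `Post` class, the `flagged_questionable` field, and the `reshare` function are hypothetical names, and the flag is assumed to come from some upstream classifier or fact-check label.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    # Hypothetical flag, assumed to be set upstream by a classifier or fact-checker.
    flagged_questionable: bool


def reshare(post: Post, confirmed: bool = False) -> str:
    """Return the outcome of a reshare attempt under a two-click rule."""
    if post.flagged_questionable and not confirmed:
        # First click on flagged content: ask for confirmation instead of sharing.
        return "needs_confirmation"
    # Unflagged posts, or a confirmed second click, go through.
    return "shared"


flagged = Post(post_id="p1", flagged_questionable=True)
print(reshare(flagged))                  # first click
print(reshare(flagged, confirmed=True))  # second click
```

The design choice here is that friction applies only to flagged posts, so ordinary sharing is unaffected; how well it works depends entirely on the quality of the flag.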

Psychological Impact of Consuming Misinformation

- Continuous exposure to misinformation increases anxiety and decision fatigue, noted in 34% of social media users in a 2025 digital wellness survey.

- Among users who consume 5+ hours of social content daily, depressive symptoms were 23% higher if exposed to misinformation regularly.

- False health claims caused misdiagnosis anxiety in nearly 18% of surveyed adults, leading to self-treatment without medical consultation.

- Gen Z users reported feeling “digitally manipulated”, with 41% stating they mistrust even verified sources after repeated misinformation exposure.

- Reinforcement loops, where users are shown similar false content repeatedly, were linked to confirmation bias escalation in 2025 psychology studies.

- Misinformation-heavy users scored 28% lower on trust perception tests compared to those with balanced content exposure.

- False information relating to financial scams created lasting distrust in fintech platforms, affecting 1 in 5 users under 35.

- Exposure to political misinformation was associated with increased polarization and hostility, especially in group discussion environments.

- Cognitive overload caused by rapid contradictory information led to digital withdrawal symptoms in 12% of surveyed daily users.

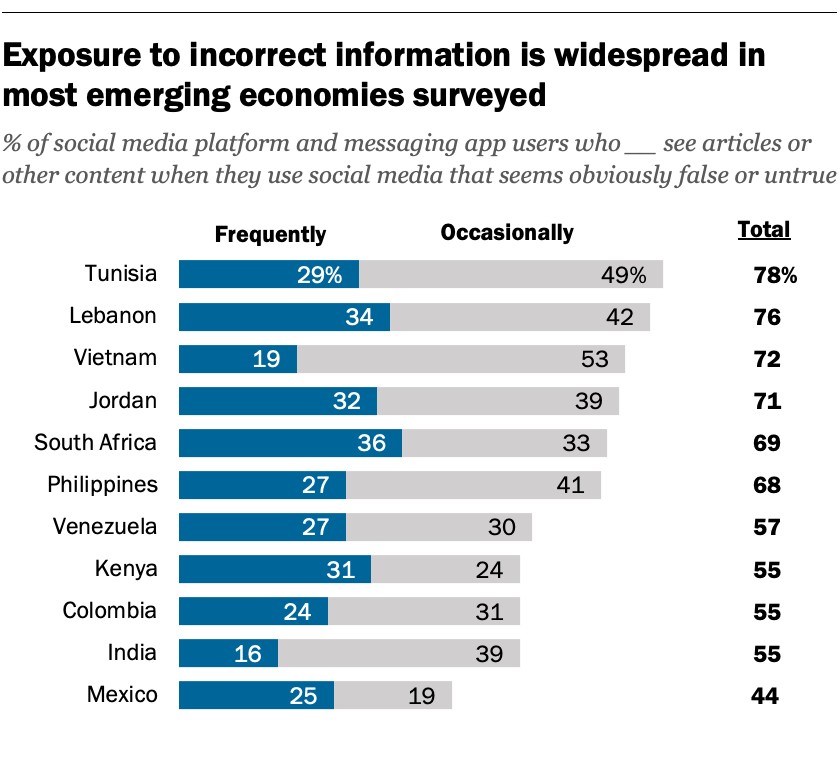

Emerging Economies and Exposure to False Information on Social Media

- Tunisia tops the list with 78% of users encountering false content, 29% frequently, and 49% occasionally.

- In Lebanon, 76% of users report seeing misinformation, 34% frequently, and 42% occasionally.

- Vietnam reports 72% total exposure, with 19% frequently and 53% occasionally seeing false or untrue content.

- Jordan follows closely with 71% of users exposed, 32% frequently, and 39% occasionally.

- South Africa has 69% exposure, with 36% encountering false content frequently, the highest frequent-exposure rate among all countries surveyed.

- In the Philippines, 68% encounter misinformation, 27% frequently and 41% occasionally.

- Venezuela records 57% exposure, 27% frequently and 30% occasionally.

- Kenya, Colombia, and India each show 55% total exposure to misinformation. Kenya stands out with 31% frequent, Colombia with 24% frequent, and India with just 16% frequent, one of the lowest in the group.

- Mexico reports the lowest overall exposure, at 44%, 25% frequently and 19% occasionally.
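
Each total in this list is the sum of the "frequently" and "occasionally" shares. As a quick sanity check, the Python sketch below re-adds the figures quoted above for the countries where both totals and frequent shares are given. The "occasionally" share for South Africa is not stated in the list; the value the script derives for it is simple subtraction, not a reported survey figure.

```python
# Shares quoted in the list above: (total, frequently, occasionally), in percent.
# None marks a value the list does not state.
exposure = {
    "Tunisia": (78, 29, 49),
    "Lebanon": (76, 34, 42),
    "Vietnam": (72, 19, 53),
    "Jordan": (71, 32, 39),
    "South Africa": (69, 36, None),
    "Philippines": (68, 27, 41),
    "Venezuela": (57, 27, 30),
    "Mexico": (44, 25, 19),
}


def occasional_share(total, frequent, occasional):
    """Return total - frequent, raising if a stated value disagrees with it."""
    derived = total - frequent
    if occasional is not None and occasional != derived:
        raise ValueError(f"inconsistent: {frequent} + {occasional} != {total}")
    return derived


implied = {country: occasional_share(*shares) for country, shares in exposure.items()}
highest_frequent = max(exposure, key=lambda country: exposure[country][1])

print(f"Implied occasional share for South Africa: {implied['South Africa']}%")
print(f"Highest frequent-exposure rate: {highest_frequent}")
```

No exception is raised, so every stated breakdown adds up to its total.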

Public Awareness and Perception of Social Media Misinformation

- In 2025, 61% of U.S. adults acknowledged that they had “probably” engaged with misinformation in the past 3 months.

- However, only 24% of users felt confident in their ability to distinguish truth from falsehood on platforms like TikTok or X.

- Trust in platform fact-checking labels has declined to below 35%.

- Around 57% of users feel that platforms prioritize engagement over accuracy, believing algorithms are built to reward virality.

- Public concern is highest in the U.S., UK, and India, where over 70% of users express worry about AI-driven fake content.

- Community reporting tools are still underused; in 2025, only 1 in 10 misinformation posts is flagged by users.

- Despite rising exposure, only 19% of users have completed digital media literacy training or awareness modules.

- Over 65% of parents in the U.S. now actively monitor or limit their children’s social media use due to misinformation fears.

- Public perception of misinformation is increasingly nuanced: younger users blame systems, while older users often blame bad actors or specific ideologies.

- 42% of surveyed Americans agree that misinformation is a top national threat, alongside climate change and cyberterrorism.

Efforts by Social Media Platforms to Combat False Content

- Meta introduced an AI fact-checking tool in 2025 that processes over 1 million posts daily, yet its real-time success rate is just 37%.

- TikTok expanded its content labeling system, but 30% of flagged content still remains live for over 48 hours.

- YouTube removed over 11 million videos containing health and election misinformation in the last 12 months.

- Reddit implemented community trust scoring, giving higher visibility to mods and power users, but its effectiveness is still under review.

- X launched “Community Notes 2.0,” enabling real-time annotation of tweets, which cut misinformation spread by 12% within pilot regions.

- WhatsApp launched hyperlocal misinformation alerts in collaboration with local fact-checkers, but they are available in only 9 countries so far.

- Instagram introduced a “Why Am I Seeing This Post?” explainer to boost transparency and reduce accidental engagement with false content.

- Facebook groups that repeatedly share misinformation are now automatically demoted, reducing their reach by 40%.

- Despite these tools, platform moderation remains under-resourced: the average content moderator now reviews over 1,200 posts/day.

- Critics argue that most efforts are reactive, not preventive, leading to an arms race with bad actors exploiting new loopholes.

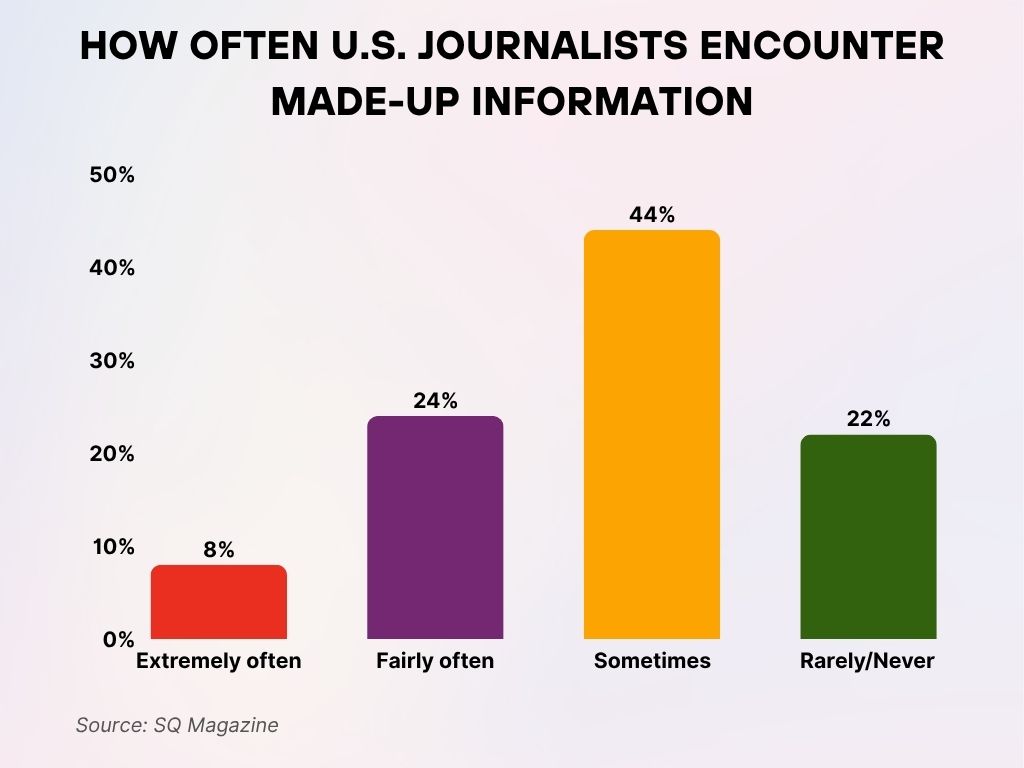

How Often U.S. Journalists Encounter Made-Up Information

- 8% of U.S. journalists say they encounter made-up information extremely often while working on a story.

- 24% report facing false information fairly often during their reporting process.

- 44% of journalists say they encounter made-up information sometimes, the most common response.

- 22% encounter fabricated content rarely or never. While encouraging, this still leaves the majority exposed to some level of misinformation risk in their daily work.

Government and Policy Responses to Online Misinformation

- As of mid-2025, 32 countries have enacted new or amended legislation directly addressing social media misinformation.

- The U.S. Digital Integrity Act, proposed in March 2025, would require platforms with over 50 million users to implement independent third-party audits of algorithmic bias and misinformation amplification.

- The European Union’s Digital Services Act (DSA) is now fully enforced, holding platforms financially liable for failing to remove harmful misinformation; fines have collectively reached €750 million.

- Brazil’s Truth in Tech Law, passed in early 2025, mandates content traceability for viral posts shared more than 10,000 times.

- In India, new rules issued by MeitY compel digital platforms to verify “high-impact” political content during elections, with enforcement by regional fact-check boards.

- The African Union launched its Misinformation Mitigation Task Force, supporting cross-border collaboration between local fact-checking agencies and platforms.

- Canada’s Online Harms Act, passed in 2024, began enforcement in 2025, targeting algorithmic misinformation spread, especially in child and health domains.

- Over 80% of democratic nations now require transparency reporting from platforms on misinformation takedown performance.

- Critics argue that authoritarian governments are misusing misinformation policies as a pretext for censorship and media suppression.

- Despite regulatory progress, enforcement remains inconsistent, particularly in jurisdictions without dedicated digital watchdogs.

Economic and Societal Costs of Misinformation

- Misinformation is estimated to cost the global economy $89 billion in 2025, factoring in public health missteps, election security costs, and business reputational damage.

- In the U.S. alone, COVID-era misinformation led to an estimated $4.2 billion in unnecessary healthcare spending between 2020 and 2025.

- Stock market manipulation via misinformation posts increased by 21% YoY, with retail investors losing over $2.3 billion in 2025 due to viral “pump-and-dump” misinformation campaigns.

- Corporate brand damage from viral falsehoods has increased legal liability exposure; 1 in 6 PR firms now offer dedicated misinformation monitoring services.

- False job ads and phishing schemes shared via LinkedIn and Facebook resulted in an estimated $920 million in consumer fraud in 2025.

- Disinformation during political campaigns was responsible for delaying legislative outcomes in at least 7 countries, leading to measurable GDP drag.

- Small businesses face disproportionate harm from local rumors and fake reviews, and 23% reported revenue loss due to digital misinformation campaigns.

- Public institutions, especially in healthcare and education, are now budgeting for “misinformation mitigation,” an emerging operational line item.

- The emotional toll on the public is also rising: burnout from misinformation exposure now affects nearly 1 in 4 digital workers.

- Social cohesion metrics declined in nations with higher misinformation exposure rates, highlighting its effect on trust in institutions and neighbors.

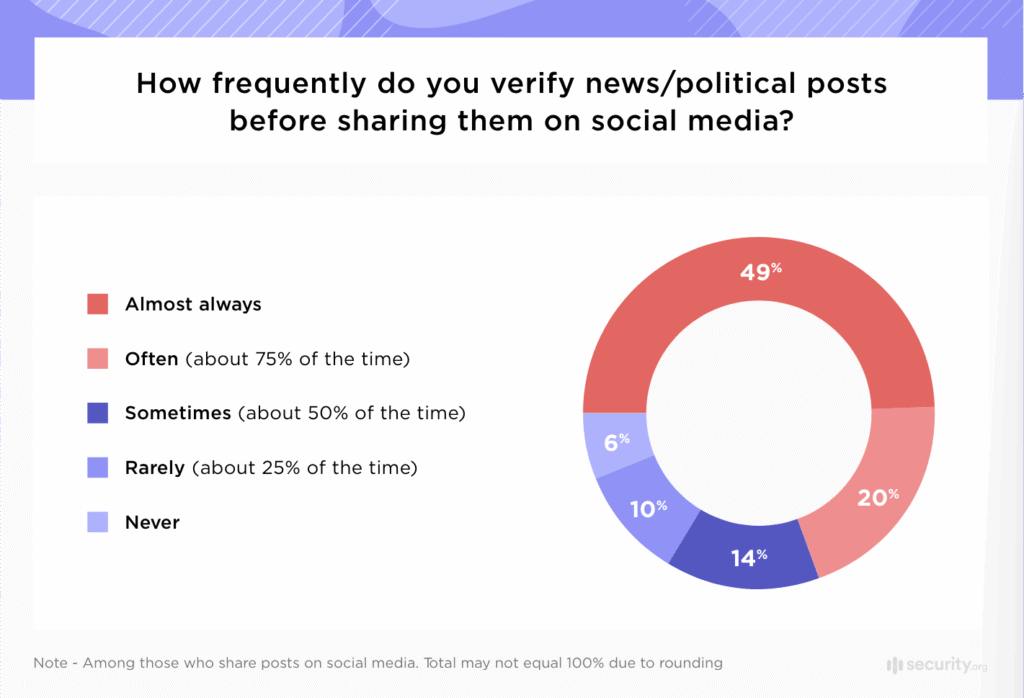

How Often People Verify News or Political Posts Before Sharing (Social Media Behavior Insight)

- 49% of users say they almost always verify news or political posts before sharing. This is the largest group, indicating that nearly half claim to practice consistent fact-checking.

- 20% verify content often, about 75% of the time. These users frequently check facts, but not as habitually as the top group.

- 14% verify sometimes, which means around 50% of the time. This middle ground reflects occasional but inconsistent verification.

- 10% of respondents say they verify rarely, or about 25% of the time. This group is less cautious and may contribute more to unverified sharing.

- 6% admit they never verify news or political posts before sharing. This small segment poses a risk for the spread of misinformation online.
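
The five groups above can be combined into one rough figure: the share of posts that respondents say they verify before sharing, averaged across all users. The Python sketch below does that arithmetic. It rests on two assumptions that are not in the survey: "almost always" is treated as 100% and "never" as 0%. The 75%, 50%, and 25% rates are the ones quoted in the list.

```python
# (share of respondents in %, approximate verification rate) from the list above.
# Assumption: "almost always" is treated as 1.00 and "never" as 0.00.
groups = {
    "almost always": (49, 1.00),
    "often": (20, 0.75),
    "sometimes": (14, 0.50),
    "rarely": (10, 0.25),
    "never": (6, 0.00),
}

# The shares sum to 99 rather than 100, most likely because of rounding,
# so the weighted average is normalized by the actual sum.
total_share = sum(share for share, _ in groups.values())
weighted = sum(share * rate for share, rate in groups.values()) / total_share

print(f"Shares sum to {total_share}%")
print(f"Implied average verification rate: {weighted:.1%}")
```

Under these assumptions the implied average is roughly 74%. Because the answers are self-reported, this is better read as an upper bound on actual verification behavior than as a measurement of it.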

Technological Trends in Detecting and Managing False Information

- AI-powered detection models can now identify false text posts with up to 93% accuracy, though image and video verification still lag at around 67%.

- Multi-modal misinformation detection, combining text, audio, and visual analysis, became standard in enterprise-level content moderation by 2025.

- Startups offering synthetic media forensics tools have raised over $3.1 billion in funding since 2023.

- The use of blockchain technology for content verification is gaining traction, especially in journalism and academic publishing.

- Google DeepMind and Meta AI collaborated on a 2025 open-source dataset aimed at training new misinformation recognition models.

- Browser-based plug-ins using real-time credibility scoring (like “NewsGuard+”) saw a 40% uptick in installations this year.

- AI hallucination detection systems are now being integrated directly into content generation tools to reduce the risk of auto-generated misinformation.

- Advances in real-time video watermarking allow for invisible trace tagging of authentic content, a key method in combating deepfake circulation.

- Mobile platforms now feature on-device misinformation warnings, which reduce the need to offload analysis to cloud-based systems and improve both speed and privacy.

- Despite progress, many experts warn that detection capabilities are still outpaced by the volume and creativity of misinformation threats.

Recent Developments

- In April 2025, TikTok launched its global “Verified Voices” initiative, prioritizing factual creators in feed algorithms to reduce misinformation reach by 17%.

- Meta announced its new Horizon Guardian AI, trained to intercept misinformation in the metaverse environment before it can be shared.

- Elon Musk’s X introduced NeuralTrust, a user-credibility scoring system based on interaction patterns, raising privacy concerns among researchers.

- WhatsApp now uses language model tagging for forwarded messages, flagging content that mirrors high-risk misinformation patterns.

- New York City piloted a municipal misinformation alert system, pinging users when certain false claims spike in volume locally.

- Reddit enhanced its mod AI toolkit, empowering community leaders to filter hyper-local false content faster and more contextually.

- Apple’s iOS 19 includes an optional “AI-Filtered News Feed” that only shows news from pre-approved trustworthy sources, sparking debate about censorship.

- A viral campaign titled #VerifyBeforeYouShare gained global traction across Instagram, YouTube, and LinkedIn, boosting content awareness initiatives.

- Education systems in Finland and South Korea rolled out new curriculum units focused on AI-era misinformation literacy for middle school students.

- Despite these efforts, the volume of false content on social platforms remains staggeringly high, prompting ongoing scrutiny from international watchdogs.

Conclusion

Misinformation on social media is no longer a fringe problem; it’s a structural flaw affecting our economies, our health systems, our democracies, and our peace of mind. In 2025, the tools for identifying and managing false information are more advanced than ever, but they are still catching up to the sheer speed and sophistication of false content creation.

Real progress will depend on collaboration, not just between governments and platforms, but also with educators, technologists, and everyday users. Whether it’s through smarter regulation, sharper AI tools, or more digitally literate societies, the fight against misinformation is a shared, ongoing responsibility.