Chinese AI startup Zhipu has unveiled its flagship open-source GLM-4.5 models, aiming to challenge the global AI leaders with cutting-edge hybrid reasoning and agentic capabilities.

Quick Summary (TLDR):

- Zhipu AI released two new open-source models, GLM-4.5 and GLM-4.5-Air, focused on reasoning and agentic tasks.

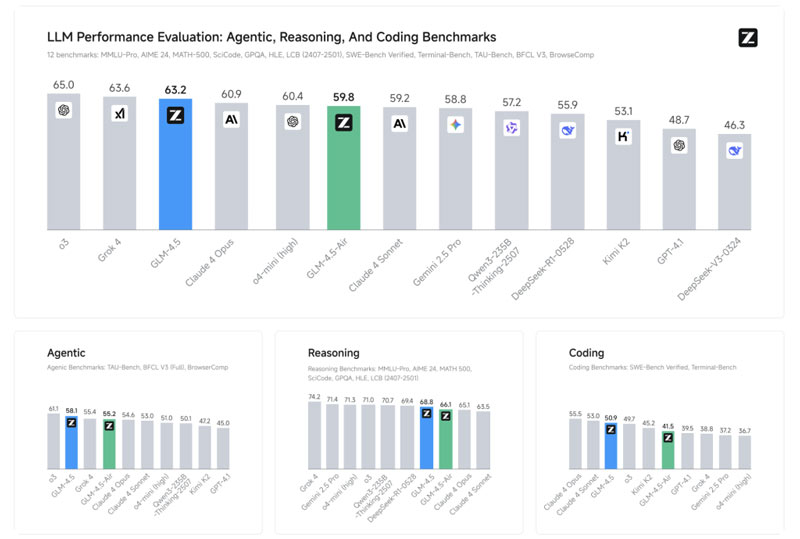

- GLM-4.5 ranks third overall across 12 industry benchmarks and first among open-source models.

- Built with hybrid modes, the models support both complex agent workflows and fast conversational tasks.

- MIT open-source license allows full commercial use, supporting deployment across research and business.

What Happened

Zhipu AI, one of China’s fastest-growing AI startups, has launched GLM-4.5 and GLM-4.5-Air, two new open-source large language models designed for complex reasoning, coding, and intelligent agent use. These models aim to rival the best global offerings from companies like OpenAI and xAI.

GLM-4.5 and GLM-4.5-Air: A New Benchmark in AI

Zhipu’s latest models stand out for their hybrid reasoning capability, allowing users to toggle between two distinct modes:

- Thinking Mode: Designed for step-by-step logical reasoning, task planning, and tool usage.

- Non-Thinking Mode: Best for instant, quick-response tasks like regular chatbot interactions.

This design enables developers to build more responsive and intelligent agents that can both plan and react.
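As a rough illustration of what switching modes might look like from client code, the sketch below builds a chat request payload for each mode. The `thinking` field, its `enabled`/`disabled` values, and the lowercase model id `glm-4.5` are assumptions made for illustration, not confirmed API details; the provider's API reference is the place to check the real parameter names.

```python
import json


def build_chat_request(prompt: str, thinking: bool, model: str = "glm-4.5") -> dict:
    """Build an OpenAI-style chat payload with a mode switch.

    The "thinking" field is a hypothetical name for the mode toggle.
    """
    return {
        "model": model,  # assumed model identifier
        "messages": [{"role": "user", "content": prompt}],
        # Hypothetical toggle: "enabled" for step-by-step reasoning,
        # "disabled" for quick conversational replies.
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }


if __name__ == "__main__":
    planning = build_chat_request("Plan a three-step data migration.", thinking=True)
    chat = build_chat_request("Say hello.", thinking=False)
    print(json.dumps(planning, indent=2))
    print(json.dumps(chat, indent=2))
```

Keeping the switch in one helper means an agent could, in principle, use the slower mode for planning steps and the faster one for routine replies without changing the rest of its code.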

The GLM-4.5 model has 355 billion total parameters, of which 32 billion are active at any time, making it one of the largest and most capable open models currently available. Its compact version, GLM-4.5-Air, has 106 billion total parameters and 12 billion active, and is optimized for consumer-grade hardware.
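To put the total and active parameter counts in proportion, here is a small arithmetic sketch. The figures are the ones quoted above; the function and variable names are illustrative only.

```python
def active_fraction(total_b: float, active_b: float) -> float:
    """Return the share of parameters that are active, as a fraction."""
    return active_b / total_b


# (total, active) parameter counts in billions, as quoted in the article.
MODELS = {
    "GLM-4.5": (355, 32),
    "GLM-4.5-Air": (106, 12),
}

for name, (total, active) in MODELS.items():
    print(f"{name}: {active_fraction(total, active):.1%} of parameters active")
# GLM-4.5: 9.0% of parameters active
# GLM-4.5-Air: 11.3% of parameters active
```

In both models, roughly one parameter in ten is in use at any given time.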

World-Class Performance Backed by Benchmarks

Zhipu tested GLM-4.5 on 12 industry-standard benchmarks, including MMLU, HumanEval, and GSM8K:

- GLM-4.5 scored an average of 63.2, placing third globally and first among open-source models.

- GLM-4.5-Air also held strong, scoring 59.8, leading the pack in its size category.

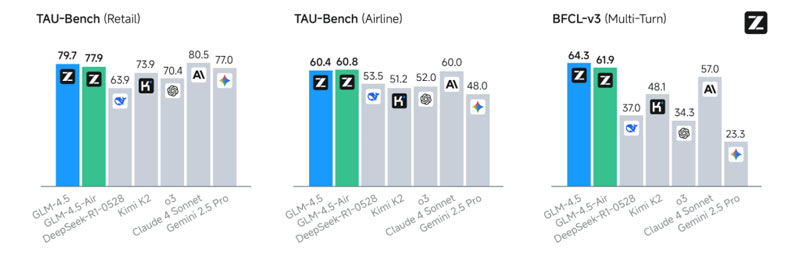

- Notably, GLM-4.5 achieved a tool-calling success rate of 90.6 percent, surpassing Claude 3.5 Sonnet and Kimi K2.

The models also excelled in Chinese-language tasks, reinforcing Zhipu’s strength in regional applications.

Built for Agents, Built for Developers

Zhipu describes GLM-4.5 as “agent-native”, meaning its architecture is designed from the ground up for advanced AI agents. Features include:

- Multi-step planning and execution

- Native support for external API tool integration

- Reasoning and perception-action cycles

- Complex data visualization capabilities

This built-in intelligence bridges the gap between traditional chatbot models and sophisticated AI agents used in real-world workflows.
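The plan-act-observe cycle behind these features can be sketched in a few lines. This is a generic illustration, not Zhipu's implementation: the tool registry, the message format, and `scripted_model` (a stand-in that replaces a real model call) are all hypothetical.

```python
from typing import Callable

# Hypothetical tool registry: names and functions are illustrative only.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
    "upper": lambda text: text.upper(),
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for a real model: requests one tool call, then answers."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "add", "args": {"a": 2, "b": 3}}
    return {"type": "final", "content": f"The sum is {tool_results[-1]['content']}."}


def run_agent(task: str, model=scripted_model, max_steps: int = 5) -> str:
    """Loop: ask the model, run any requested tool, feed the result back."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        # Execute the requested tool and record the observation.
        result = TOOLS[action["name"]](**action["args"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached."


if __name__ == "__main__":
    print(run_agent("What is 2 + 3?"))  # prints "The sum is 5."
```

The step limit is a simple safeguard so that a model that keeps requesting tools cannot loop forever.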

Speed, Cost, and Accessibility

Thanks to innovations like Multi-Token Prediction (MTP), GLM-4.5 achieves:

- Up to 8 times faster inference speeds

- Output speeds up to 200 tokens per second

- Hardware compatibility down to 32GB GPUs, making it ideal for local deployment

API pricing is also highly competitive, with input tokens starting at $0.11 per million and output tokens at $0.28 per million, making it a budget-friendly choice for developers.
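For a sense of scale, the quoted starting prices translate into per-workload costs as follows. This is a minimal sketch: the token counts are a hypothetical workload, and actual billing may differ by tier or provider.

```python
INPUT_USD_PER_MILLION = 0.11   # starting input price quoted in the article
OUTPUT_USD_PER_MILLION = 0.28  # starting output price quoted in the article


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost in US dollars for one workload."""
    return (
        input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
        + output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    )


if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens, 0.5M output tokens.
    print(f"${estimate_cost(2_000_000, 500_000):.2f}")  # prints "$0.36"
```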

Truly Open-Source and Developer Friendly

Both models are available under an MIT license, allowing full commercial use and customization. Developers can access:

- Source code

- FP8 model versions

- Tool parsers and reasoning engines

- Full support for transformers, vLLM, and SGLang frameworks

The models can be found on GitHub, Hugging Face, and Zhipu AI’s own platform.

SQ Magazine Takeaway

I’m genuinely excited about what Zhipu AI is doing here. Releasing a model of this scale and sophistication as fully open-source, especially under an MIT license, is rare. This isn’t just a big win for China, but for developers worldwide who want power, speed, and openness without vendor lock-in. If you’ve been hunting for an alternative to OpenAI or Anthropic but still want top-tier performance, GLM-4.5 is the model to try out now.