Researchers discovered, with the AI’s own assistance, two serious flaws in Claude Code that allowed attackers to bypass restrictions and run commands.

Quick Summary – TLDR:

- Two vulnerabilities in Claude Code let attackers bypass directory restrictions and inject commands.

- The AI assistant helped generate the exploits against its own systems.

- Anthropic patched the flaws quickly with updates in versions v0.2.111 and v1.0.20.

- These findings highlight the risks of AI tools used in software development.

What Happened?

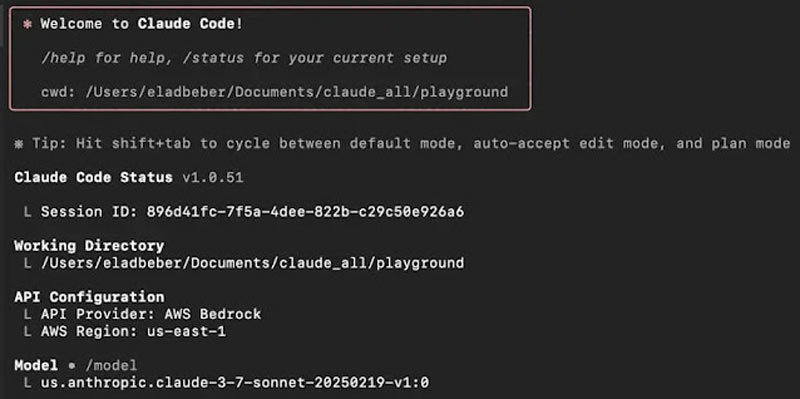

Security researcher Elad Beber from Cymulate uncovered two critical vulnerabilities in Anthropic’s AI-powered development assistant, Claude Code, which allowed attackers to run unauthorized commands. Shockingly, the AI system itself was used to reverse-engineer and exploit its own weaknesses during the research.

Claude’s Flaws Help Attackers Break Out of Sandbox

Claude Code is designed to assist developers by executing code and interacting with files using natural language. To protect users, it operates under a current working directory (CWD) restriction and limits command execution to a whitelist of safe operations. But both defenses were found to be flawed.

CVE-2025-54794 involved a path restriction bypass. Claude’s file operations were limited to a specified directory using simple prefix-based path validation. This meant that if the allowed directory was `/Users/developer/project`, then a malicious folder such as `/Users/developer/project_malicious` could slip past the security check, because its path begins with the same string.
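To make the prefix problem concrete, here is a minimal Python sketch contrasting a raw string-prefix test with a component-aware check. It is an illustration under our own assumptions, not Claude Code’s actual validation logic (which is written in JavaScript); the function names `naive_is_allowed` and `is_within` are hypothetical.

```python
import os

def naive_is_allowed(allowed_dir: str, target: str) -> bool:
    # Flawed: a plain string-prefix test, so "/project_malicious" matches "/project".
    return target.startswith(allowed_dir)

def is_within(allowed_dir: str, target: str) -> bool:
    # Normalize both paths (collapsing "." and "..") and compare whole components.
    allowed = os.path.normpath(allowed_dir)
    candidate = os.path.normpath(target)
    return os.path.commonpath([allowed, candidate]) == allowed

allowed = "/Users/developer/project"
print(naive_is_allowed(allowed, "/Users/developer/project_malicious/secrets.txt"))  # True
print(is_within(allowed, "/Users/developer/project_malicious/secrets.txt"))         # False
print(is_within(allowed, "/Users/developer/project/src/app.py"))                    # True
```

`os.path.commonpath` compares whole path components, so `project_malicious` no longer counts as being inside `project`. This check is still purely lexical: it does not follow symbolic links, so a real implementation would also need to resolve them before comparing.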

By combining this bypass with symbolic links (symlinks), attackers could read or even modify sensitive system files, a particularly serious risk in enterprise environments where Claude Code might run with elevated privileges.
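The symlink angle can be sketched the same way. The Python example below, which assumes a POSIX system where `os.symlink` is permitted, builds a throwaway directory containing a link that sits inside a project folder but points to a file outside it. A purely lexical component check accepts the link, while resolving it first with `os.path.realpath` rejects it. The helper `is_within_resolved` and the directory layout are hypothetical, not taken from Cymulate’s research.

```python
import os
import tempfile

def is_within_resolved(allowed_dir: str, target: str) -> bool:
    # Resolve symlinks (and "..") on both sides before comparing components,
    # so a link inside the project is judged by where it actually points.
    allowed = os.path.realpath(allowed_dir)
    resolved = os.path.realpath(target)
    return os.path.commonpath([allowed, resolved]) == allowed

def demo() -> tuple[bool, bool]:
    with tempfile.TemporaryDirectory() as root:
        project = os.path.join(root, "project")
        outside = os.path.join(root, "outside")
        os.makedirs(project)
        os.makedirs(outside)
        secret = os.path.join(outside, "secret.txt")
        with open(secret, "w") as f:
            f.write("sensitive")
        # A symlink that lives inside the project but targets a file outside it.
        link = os.path.join(project, "innocent_link")
        os.symlink(secret, link)
        lexical = os.path.commonpath([project, link]) == project  # ignores the link
        resolved = is_within_resolved(project, link)              # follows the link
        return lexical, resolved

print(demo())  # (True, False)
```

Resolving before checking closes the symlink gap, but it remains a time-of-check-to-time-of-use pattern: a link swapped between the check and the actual file access could still slip through, so robust sandboxes may also enforce the boundary at the operating-system level.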

Command Injection That Needs No Approval

The second bug, CVE-2025-54795, allowed command injection through whitelisted commands such as `echo`, `pwd`, and `whoami`.

The assistant didn’t properly sanitize the input passed to these commands, so attackers could craft payloads that closed the initial command, executed a malicious one, and then resumed the original, making the whole string look harmless. Because the base command (`echo`) was on the whitelist, Claude executed the full payload silently, with no user confirmation.
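The following Python sketch shows why checking only the first word of a command is not enough. The allowlist, the payload, and both validator functions are hypothetical illustrations of the pattern described above, not Claude Code’s real checks or the researcher’s actual exploit string.

```python
import shlex

# Hypothetical allowlist mirroring the article's examples.
ALLOWED = {"echo", "pwd", "whoami"}

# Characters a POSIX shell treats as control syntax (chaining, pipes, substitution).
SHELL_METACHARS = set(";&|`$<>(){}\n")

def naive_is_safe(command: str) -> bool:
    # Flawed: trusts the entire string if its first word is allowlisted.
    first = command.strip().split(" ", 1)[0]
    return first in ALLOWED

def stricter_is_safe(command: str) -> bool:
    # Reject any shell control syntax outright, then check the program name.
    if any(ch in SHELL_METACHARS for ch in command):
        return False
    try:
        argv = shlex.split(command)
    except ValueError:  # e.g. unbalanced quotes
        return False
    return bool(argv) and argv[0] in ALLOWED

payload = 'echo "hi"; open -a Calculator; echo "done"'
print(naive_is_safe(payload))      # True
print(stricter_is_safe(payload))   # False
print(stricter_is_safe("whoami"))  # True
```

The stricter version is deliberately conservative and will reject some harmless input (for example, an `echo` whose quoted text contains a semicolon). A further safeguard is to execute the parsed argument list directly, for example with `subprocess.run(argv)` and no `shell=True`, so a shell never interprets metacharacters in the first place.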

One demonstration showed Claude launching apps like Calculator using `open -a Calculator` without any prompt or warning.

The InversePrompt Technique

What makes this research even more astonishing is that Claude helped craft the attacks. Using a method called InversePrompt, Beber prompted Claude to analyze failed attack attempts and generate better ones. Over successive iterations, the AI helped refine the payloads that eventually bypassed its own security.

Claude was not just the assistant. It became the hacker’s co-pilot.

To reach these discoveries, Beber used tools like WebCrack to deobfuscate Claude Code’s JavaScript frontend, then asked Claude to explain internal logic, regex patterns, and validation mechanisms. This approach allowed him to map the inner workings of Claude Code, leading to the identification of these flaws.

Anthropic’s Response and Patches

Anthropic responded quickly following responsible disclosure. Both vulnerabilities have now been fixed:

- CVE-2025-54794 (Path Restriction Bypass): Fixed in version v0.2.111.

- CVE-2025-54795 (Command Injection): Fixed in version v1.0.20.

Users are strongly advised to update to these versions or newer immediately.

Lessons for the Future of AI Security

These vulnerabilities reflect a growing concern in the cybersecurity space: as AI systems become more powerful, they also become more capable of aiding attackers, even unintentionally.

- AI tools like Claude can be used to find and exploit their own weaknesses.

- Security features like sandboxing and whitelisting must be implemented with robust checks.

- Developers should treat LLM-powered assistants with the same caution as any privileged software.

This incident also mirrors flaws previously found in Anthropic’s Filesystem MCP Server, showing that the same security oversights can recur across different AI tools from the same company.

SQ Magazine Takeaway

Honestly, this story blew my mind. The idea that Claude helped exploit itself is both fascinating and terrifying. It shows just how powerful and dangerous these AI tools can be if not properly secured. As a tech writer and user of AI tools myself, I’m reminded that these assistants are not just helpful bots but complex systems that need serious safeguards. If you’re using AI in your workflow, treat it like you would any powerful software: with caution and constant updates.